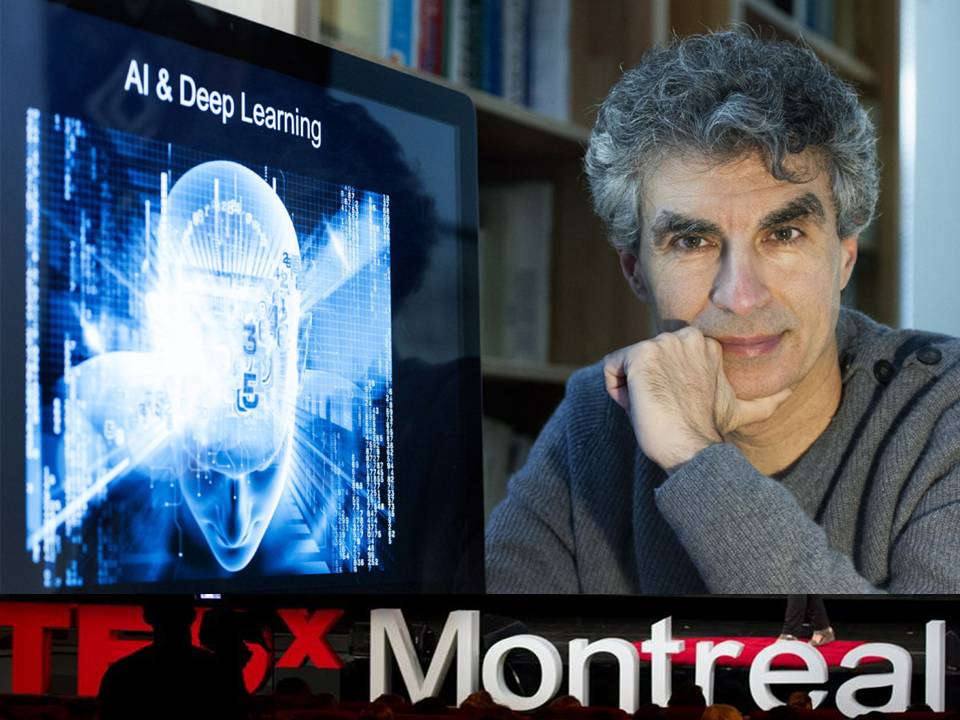

Whether you are new to machine learning or an advanced researcher in deep learning methods and their applications, you can benefit from Lex Fridman’s course on Deep Learning for Self-Driving Cars.

If you are interested in this course, you can go to http://selfdrivingcars.mit.edu/ and register an account on the site to stay up to date. The material for the course is free and open to the public.

To reach more people who are interested in deep learning, machine learning, and artificial intelligence, I would like to share this course material on my website.

This lecture introduces the types of machine learning, the neuron as a computational building block for neural nets, Q-learning, deep reinforcement learning, and the DeepTraffic simulation, which uses deep reinforcement learning for the motion planning task.

You can find the slides here:

deep-reinforcement-learning-for-motion-planning

Learning to Move: Deep Reinforcement Learning for Motion Planning

DeepTraffic: Solving Traffic with Deep Reinforcement Learning

Types of machine learning:

* Supervised Learning
* Unsupervised Learning
* Semi-Supervised Learning
* Reinforcement Learning

Americans spend 8 billion hours stuck in traffic every year.

Deep neural networks can help!

Philosophical Motivation for Reinforcement Learning

Takeaway from Supervised Learning:

Neural networks are great at memorization and not (yet) great at reasoning.

Hope for Reinforcement Learning:

Brute-force propagation of outcomes to knowledge about states and actions. This is a kind of brute-force “reasoning”.

Reinforcement learning is a general-purpose framework for decision-making:

* An agent operates in an environment (for example, Atari Breakout)
* The agent has the capacity to act
* Each action influences the agent’s future state
* Success is measured by a reward signal
* The goal is to select actions that maximize future reward
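The loop described above can be made concrete with a small tabular Q-learning sketch. This is only an illustration under stated assumptions: the `Corridor` environment, the `train` function, and the hyperparameter values are hypothetical and do not come from the lecture or from DeepTraffic. The agent starts at the left end of a five-cell corridor, can move left or right, and receives a reward of 1 only when it reaches the rightmost cell.

```python
import random


class Corridor:
    """Hypothetical toy environment: cells 0..n-1, reward 1 at the last cell."""

    def __init__(self, n=5):
        self.n = n
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action 0 moves left, action 1 moves right; walls clamp the position
        delta = 1 if action == 1 else -1
        self.state = min(max(self.state + delta, 0), self.n - 1)
        done = self.state == self.n - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy (assumed settings)."""
    rng = random.Random(seed)
    env = Corridor()
    q = [[0.0, 0.0] for _ in range(env.n)]  # q[state][action]
    for _ in range(episodes):
        state = env.reset()
        done = False
        while not done:
            # explore with probability epsilon, or when both actions tie
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env.step(action)
            # Q-learning target: reward plus discounted best future value
            future = 0.0 if done else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])
            state = next_state
    return q


q_table = train()
# Greedy action in each non-terminal cell (1 means "move right")
policy = [max((0, 1), key=lambda a: q_table[s][a]) for s in range(4)]
print(policy)
```

Each piece maps onto one bullet: `Corridor` is the environment, `step` is how an action changes the agent's future state, `reward` is the success signal, and the update inside `train` is how the agent learns which actions maximize future reward. In this toy setup the learned greedy policy should move right in every cell.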

Philosophical Motivation for Deep Reinforcement Learning

Hope for Deep Learning + Reinforcement Learning: General purpose artificial intelligence through efficient generalizable learning of the optimal thing to do given a formalized set of actions and states (possibly huge).

Lex Fridman is a Postdoctoral Associate at the MIT AgeLab. He received his BS, MS, and PhD from Drexel University, where he worked on applications of machine learning and numerical optimization techniques in a number of fields, including robotics, ad hoc wireless networks, active authentication, and activity recognition. Before joining MIT, Dr. Fridman worked as a visiting researcher at Google. His research interests include machine learning, decision fusion, numerical optimization, and the development and application of deep neural networks for driver state sensing, scene perception, and shared control of semi-autonomous vehicles.