Whether you are a beginner in machine learning or an advanced researcher in deep learning methods and their applications, you can benefit from Lex Fridman’s course on Deep Learning for Self-Driving Cars.

If you are interested in this course, you can go to http://selfdrivingcars.mit.edu/ and register an account on the site to stay up to date. The course material is free and open to the public.

To reach more people who are interested in deep learning, machine learning, and artificial intelligence, I would like to share this course material on my website.

This lecture introduces “Deep Learning for Human-Centered Semi-Autonomous Vehicles”.

You can find the slides here.


Why Body Pose?

Safety Systems: Seatbelt and Airbag Design

How much time do we spend in the “optimal” crash test dummy position?

Physical Distraction and Incapacitation

“Physical” is a more dramatic manifestation of “mental” inattention

Manual driving: How much time is needed to get back into physical position to avoid a crash?

Semi-automated control: How much time is needed to get back into physical position?

Extended Gaze Classification

How often and where does gaze classification break down in naturalistic driving when the body is out of position for the camera?

Effect of Seatbelts

*Decreased traumatic brain injury (10.4% to 4.1%)

*Decreased head, face, and neck injury (29.3% to 16.6%)

*Increased spine injury (17.9% to 35.5%)

-But severity decreased (e.g., fracture from 22% to 4%)

*Seat belts saved 12,802 lives in 2014.
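The slide gives absolute percentages, so the size of each effect is easier to compare as a relative change. The short Python sketch below does that arithmetic. It assumes each pair reads as "without seat belt → with seat belt", which the slide implies but does not state, and it assumes the fracture figure refers to spine injuries because it follows that bullet. `SEATBELT_RATES` and `relative_change` are hypothetical names.

```python
# Injury rates quoted on the slide, in percent.
# Assumption: each pair is (without seat belt, with seat belt).
SEATBELT_RATES = {
    "traumatic brain injury": (10.4, 4.1),
    "head, face, and neck injury": (29.3, 16.6),
    "spine injury": (17.9, 35.5),
    "fracture (spine injury severity, assumed)": (22.0, 4.0),
}


def relative_change(before: float, after: float) -> float:
    """Return the relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


if __name__ == "__main__":
    for injury, (before, after) in SEATBELT_RATES.items():
        change = relative_change(before, after)
        print(f"{injury}: {before}% -> {after}% ({change:+.1f}% relative)")
```

Read this way, traumatic brain injury falls by roughly 61% in relative terms while spine injury nearly doubles, which is why the severity note on fractures matters.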

Biofidelity: “It’s important to have a dummy that acts like a human so that the restraints that you develop have a benefit for the human.” – Jesse Buehler (Toyota)

Driver State Detection

Challenge: real-world data is “messy”, so we have to deal with:

*Vibration

*Lighting variation

*Body, head, eye movement

Solution:

*Automated calibration

*Video stabilization (multi-resolutional)

*Face part frontalization

*Phase-based motion magnification

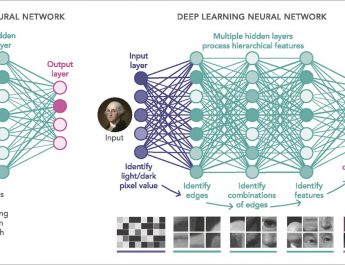

*Use deep neural networks (DNN)

-No feature engineering

-Use raw data
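The lecture lists multi-resolution video stabilization as part of the solution but does not spell out the algorithm. As a much simpler stand-in (not the lecture's method), the NumPy sketch below estimates a single global translation between two frames with phase correlation and undoes it. It ignores rotation, scale, and the multi-resolution aspect, and `estimate_shift` and `stabilize` are hypothetical names.

```python
import numpy as np


def estimate_shift(reference: np.ndarray, frame: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (dy, dx) such that frame ~= np.roll(reference, (dy, dx)),
    using phase correlation on two grayscale images of equal shape."""
    f_ref = np.fft.fft2(reference)
    f_frame = np.fft.fft2(frame)
    cross_power = f_frame * np.conj(f_ref)
    cross_power /= np.abs(cross_power) + 1e-12  # keep only the phase
    correlation = np.fft.ifft2(cross_power).real
    dy, dx = np.unravel_index(np.argmax(correlation), correlation.shape)
    h, w = reference.shape
    # Peaks past the midpoint correspond to negative shifts (FFT wrap-around).
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)


def stabilize(frames: list[np.ndarray]) -> list[np.ndarray]:
    """Align every frame to the first one by undoing its estimated translation.
    np.roll wraps pixels around the border; a real system would crop or pad instead."""
    reference = frames[0]
    stabilized = [reference]
    for frame in frames[1:]:
        dy, dx = estimate_shift(reference, frame)
        stabilized.append(np.roll(frame, (-dy, -dx), axis=(0, 1)))
    return stabilized
```

Phase correlation is used here only because it is short and exact for pure translations; in-vehicle footage with vibration would also need sub-pixel and local motion handling.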

Gaze Classification Pipeline

1. Face detection (the only easy step)

2. Face alignment (active appearance models or deep nets)

3. Eye/pupil detection (are the eyes visible?)

4. Head (and eye) pose estimation (+ normalization)

5. Classification (supervised learning, so it improves with more data)

6. Decision pruning (how confident is the prediction?)
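The six steps above can be sketched as a chain in which any stage may fail and the final prediction is dropped when confidence is low. In this Python sketch the stage callables, the six gaze-region labels, and the 0.7 threshold are all hypothetical placeholders, and "decision pruning" is interpreted as rejecting predictions whose top softmax probability falls below a threshold; the lecture does not specify these details.

```python
from dataclasses import dataclass
from typing import Callable, Optional

import numpy as np

# Hypothetical label set for the gaze regions.
GAZE_REGIONS = ("road", "left", "right", "rearview", "center_stack", "instrument_cluster")


@dataclass
class GazeStages:
    """Hypothetical callables for steps 1-5; the first three return None on failure."""
    detect_face: Callable[[np.ndarray], Optional[np.ndarray]]
    align_face: Callable[[np.ndarray], Optional[np.ndarray]]
    detect_eyes: Callable[[np.ndarray], Optional[np.ndarray]]
    estimate_pose: Callable[[np.ndarray, np.ndarray], np.ndarray]
    classify: Callable[[np.ndarray], np.ndarray]  # one logit per gaze region


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array of logits."""
    exps = np.exp(logits - np.max(logits))
    return exps / exps.sum()


def classify_gaze(frame: np.ndarray, stages: GazeStages,
                  threshold: float = 0.7) -> Optional[str]:
    """Run the pipeline on one frame; return a region label or None if pruned."""
    face = stages.detect_face(frame)                   # 1. face detection
    if face is None:
        return None
    landmarks = stages.align_face(face)                # 2. face alignment
    if landmarks is None:
        return None
    eyes = stages.detect_eyes(face)                    # 3. eye/pupil detection
    if eyes is None:
        return None                                    # eyes not visible
    features = stages.estimate_pose(landmarks, eyes)   # 4. head/eye pose, normalized
    probs = softmax(stages.classify(features))         # 5. classification
    best = int(np.argmax(probs))
    if probs[best] < threshold:                        # 6. decision pruning
        return None
    return GAZE_REGIONS[best]
```

Returning None instead of a forced label lets downstream logic count how often the pipeline abstains, which relates to the question of how often gaze classification breaks down when the body is out of position.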

Lex Fridman is a Postdoctoral Associate at the MIT AgeLab. He received his BS, MS, and PhD from Drexel University, where he worked on applications of machine learning and numerical optimization techniques in a number of fields, including robotics, ad hoc wireless networks, active authentication, and activity recognition. Before joining MIT, Dr. Fridman worked as a visiting researcher at Google. His research interests include machine learning, decision fusion, numerical optimization, and developing and applying deep neural networks in the context of driver state sensing, scene perception, and shared control of semi-autonomous vehicles.