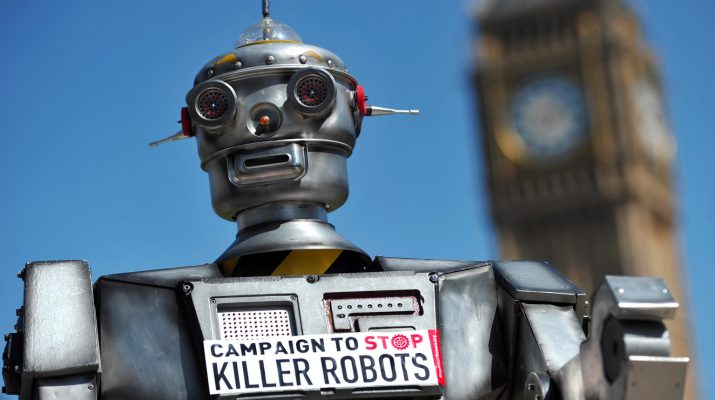

An international consortium of experts is campaigning for a ban on AI weapons. This comes at a conference in Australia where the latest advances in artificial intelligence and its uses are being explored.

Say the words "killer robots" and the Hollywood franchise Terminator may come to mind. But while artificial intelligence experts say that sort of advancement in autonomous lethal weapons is decades off, other systems are already being developed, including Russia’s robot tank, BAE Systems’ long-range autonomous missile bomber, and Samsung’s sentry gun, which can fire at will and is already deployed along South Korea’s Demilitarized Zone.

But at a leading artificial intelligence conference being held in Melbourne, global AI founders have released an open letter calling for a ban on the development and deployment of autonomous lethal weapons.

Professor Toby Walsh authored the statement.

Professor Toby Walsh, Australian Researcher

“The arms race happening in developing this technology and it’s going to be a revolution, a step change in how we fight war. These are going to be weapons of mass destruction. They’re going to be weapons of terror. Despots and terrorist organisations will get their hands on them. We do have an opportunity not to go down that road.”

The global gathering is showcasing positive AI uses as well as advances in the field, including the control of a robot arm through brainwaves.

Yaara Bou Melhem

“The robots here beside me and others like them at this conference are the friendly face of AI. A United Nations Convention will meet in November to discuss whether to ban autonomous lethal weapons. But not all participants here are convinced a blanket ban is the best way to go.”

Dr. Virginia Dignum consults for the Dutch military and says positive uses of AI in war need to be explored.

Dr. Virginia Dignum, Dutch Researcher

“Robots will be less led by emotions. If the robot is autonomous, it is not only autonomous to kill but also to take the decision not to kill, and the option is some things which we really need much more investigation, and the ban will impair that type of investigations.”

Other AI researchers disagree.

Stuart Russell, Bayesian Logic Inc.

“The idea that you would design a machine that can decide to kill a human, it’s a pretty simple thing that you’d want to ban.”

The challenge now, as with all technologies, is to bring AI under ethical and legal control, and to decide whether that is even possible with autonomous weapons.