Leading artificial-intelligence researchers gathered this week for the prestigious Neural Information Processing Systems conference have a new topic on their agenda. Alongside the usual cutting-edge research, panel discussions, and socializing, there is concern about AI’s power.

The issue was crystallized in a keynote from Microsoft researcher Kate Crawford Tuesday. The conference, which drew nearly 8,000 researchers to Long Beach, California, is deeply technical, swirling in dense clouds of math and algorithms. Crawford’s good-humored talk featured nary an equation and took the form of an ethical wake-up call. She urged attendees to start considering, and finding ways to mitigate, accidental or intentional harms caused by their creations. “Amongst the very real excitement about what we can do there are also some really concerning problems arising,” Crawford said.

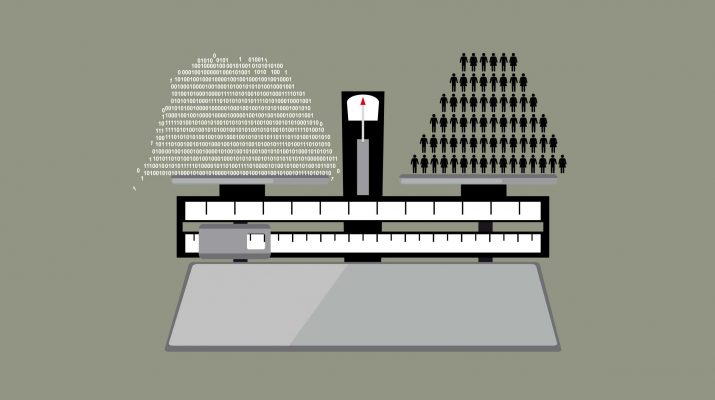

One such problem occurred in 2015, when Google’s photo service labeled some black people as gorillas. More recently, researchers found that image-processing algorithms both learned and amplified gender stereotypes. Crawford told the audience that more troubling errors are surely brewing behind closed doors, as companies and governments adopt machine learning in areas such as criminal justice and finance. “The common examples I’m sharing today are just the tip of the iceberg,” she said. In addition to her Microsoft role, Crawford is a cofounder of the AI Now Institute at NYU, which studies the social implications of artificial intelligence.

Concern about the potential downsides of more powerful AI is apparent elsewhere at the conference. A tutorial session hosted by Cornell and Berkeley professors in the cavernous main hall Monday focused on building fairness into machine-learning systems, a particular issue as governments increasingly tap AI software. It included a reminder for researchers of legal barriers, such as the Civil Rights and Genetic Information Nondiscrimination acts. One concern is that even when machine-learning systems are programmed to be blind to race or gender, for example, they may use other signals in the data, such as the location of a person’s home, as a proxy for those attributes.
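The proxy concern can be made concrete with a toy example. The sketch below is illustrative only and is not drawn from the tutorial: the records, zip codes, and the majority-vote rule are all invented assumptions. It trains a rule on zip code alone, never reading group membership, and then measures how often each group would be approved.

```python
from collections import defaultdict

# Hypothetical synthetic records: (group, zip_code, historically_approved).
# Assumption: group membership correlates with zip code, and past
# approvals were skewed between the two zip codes.
RECORDS = (
    [("A", "90001", 1)] * 8 + [("A", "90001", 0)] * 2 +
    [("A", "90002", 1)] * 1 + [("A", "90002", 0)] * 1 +
    [("B", "90001", 1)] * 1 + [("B", "90001", 0)] * 1 +
    [("B", "90002", 1)] * 2 + [("B", "90002", 0)] * 8
)


def fit_zip_model(records):
    """Learn an approval rule from zip code alone; group is never read."""
    totals = defaultdict(lambda: [0, 0])  # zip -> [approved, count]
    for _group, zip_code, approved in records:
        totals[zip_code][0] += approved
        totals[zip_code][1] += 1
    # Approve a zip code if at least half of past applicants there were approved.
    return {z: (a / n) >= 0.5 for z, (a, n) in totals.items()}


def approval_rate_by_group(records, model):
    """Share of each group the group-blind rule would approve."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, count]
    for group, zip_code, _approved in records:
        counts[group][0] += int(model[zip_code])
        counts[group][1] += 1
    return {g: a / n for g, (a, n) in counts.items()}


if __name__ == "__main__":
    model = fit_zip_model(RECORDS)
    rates = approval_rate_by_group(RECORDS, model)
    for group in sorted(rates):
        print(f"group {group}: approval rate {rates[group]:.2f}")
```

In this invented data the rule approves about 83 percent of group A and about 17 percent of group B, even though it never sees the group label: the zip code carries the information for it.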

Some researchers are presenting techniques that could constrain or audit AI software. On Thursday, Victoria Krakovna, a researcher from Alphabet’s DeepMind research group, is scheduled to give a talk on “AI safety,” a relatively new strand of work concerned with preventing software from developing undesirable or surprising behaviors, such as trying to avoid being switched off. Oxford University researchers planned to host an AI-safety-themed lunch discussion earlier in the day.

Krakovna’s talk is part of a one-day workshop dedicated to techniques for peering inside machine-learning systems to understand how they work—making them “interpretable,” in the jargon of the field. Many machine-learning systems are now essentially black boxes; their creators know they work, but can’t explain exactly why they make particular decisions. That will present more problems as startups and large companies such as Google apply machine learning in areas such as hiring and healthcare. “In domains like medicine we can’t have these models just be a black box where something goes in and you get something out but don’t know why,” says Maithra Raghu, a machine-learning researcher at Google. On Monday, she presented open-source software developed with colleagues that can reveal what a machine-learning program is paying attention to in data. It may ultimately allow a doctor to see what part of a scan or patient history led an AI assistant to make a particular diagnosis.

Others in Long Beach hope to make the people building AI better reflect humanity. Like computer science as a whole, machine learning skews towards the white, male, and western. A parallel technical conference called Women in Machine Learning has run alongside NIPS for a decade. This Friday sees the first Black in AI workshop, intended to create a dedicated space for people of color in the field to present their work.

Hanna Wallach, co-chair of NIPS, cofounder of Women in Machine Learning, and a researcher at Microsoft, says those diversity efforts both help individuals and make AI technology better. “If you have a diversity of perspectives and background you might be more likely to check for bias against different groups,” she says, meaning code that calls black people gorillas would be less likely to reach the public. Wallach also points to behavioral research showing that diverse teams consider a broader range of ideas when solving problems.

Ultimately, AI researchers alone can’t and shouldn’t decide how society puts their ideas to use. “A lot of decisions about the future of this field cannot be made in the disciplines in which it began,” says Terah Lyons, executive director of Partnership on AI, a nonprofit launched last year by tech companies to mull the societal impacts of AI. (The organization held a board meeting on the sidelines of NIPS this week.) She says companies, civil-society groups, citizens, and governments all need to engage with the issue.

Yet as the army of corporate recruiters at NIPS, from companies ranging from Audi to Target, shows, AI researchers’ importance in so many spheres gives them unusual power. Towards the end of her talk Tuesday, Crawford suggested civil disobedience could shape the uses of AI. She talked of French engineer René Carmille, who sabotaged tabulating machines used by the Nazis to track French Jews. And she told today’s AI engineers to consider the lines they don’t want their technology to cross. “Are there some things we just shouldn’t build?” she asked.

Tom Simonite

Source: https://www.wired.com/story/artificial-intelligence-seeks-an-ethical-conscience/