Over the last few years, rapid progress in AI has enabled our smartphones, social networks, and search engines to understand our voice, recognize our faces, and identify objects in our photos with very good accuracy. These dramatic improvements are due in large part to the emergence of a new class of machine learning methods known as Deep Learning.

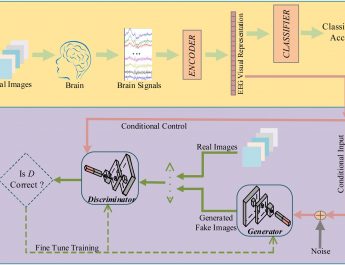

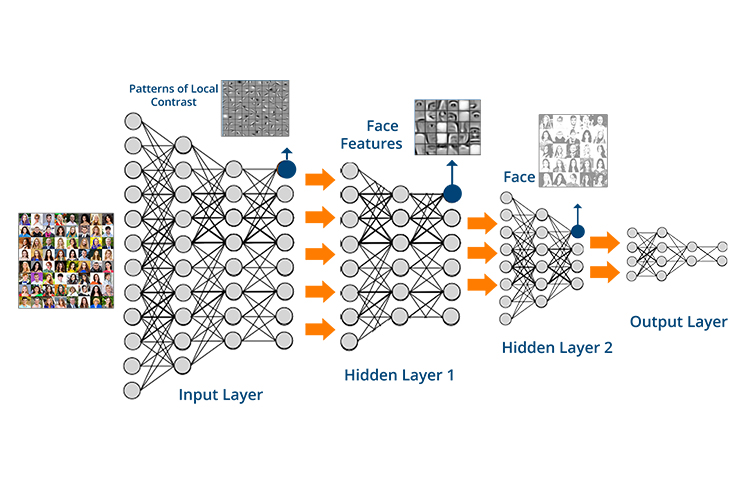

Animals and humans can learn to see, perceive, act, and communicate with an efficiency that no Machine Learning method can approach. The brains of humans and animals are “deep”, in the sense that each action is the result of a long chain of synaptic communications (many layers of processing). We are currently researching efficient learning algorithms for such “deep architectures”, concentrating on unsupervised learning algorithms that can be used to produce deep hierarchies of features for visual recognition. We surmise that understanding deep learning will not only enable us to build more intelligent machines but will also help us understand human intelligence and the mechanisms of human learning.

http://www.cs.nyu.edu/~yann/research/deep/

A particular type of deep learning system, the convolutional network (ConvNet), has been especially successful for image and speech recognition. ConvNets are a kind of artificial neural network whose architecture is somewhat inspired by that of the visual cortex. What distinguishes ConvNets and other deep learning systems from previous approaches is their ability to learn the entire perception process from end to end: instead of relying on hand-designed features, a deep learning system automatically learns appropriate representations of the perceptual world as part of the learning process. A new type of deep learning architecture, the memory-augmented network, goes beyond perception by enabling reasoning, attention, and factual memory.
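To make the ConvNet idea a little more concrete, here is a minimal sketch, in plain Python, of the forward pass of a single convolutional stage: slide a small kernel over an image, apply a ReLU non-linearity, then downsample with 2x2 max pooling. All function names (`conv2d`, `relu`, `max_pool2x2`, `conv_stage`) are hypothetical and chosen for illustration only; this is not the implementation of any particular system described here. The kernel is hand-picked as a vertical-edge detector, whereas a real ConvNet stacks many such stages with many kernels each and learns the kernel values from data by backpropagation.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, 'valid' positions only).
    Like most ConvNet libraries, this computes cross-correlation (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


def relu(fmap: Matrix) -> Matrix:
    """Element-wise non-linearity: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


def conv_stage(image: Matrix, kernel: Matrix) -> Matrix:
    """One stage: convolution -> ReLU -> pooling."""
    return max_pool2x2(relu(conv2d(image, kernel)))


# A 5x5 image with a vertical edge: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge detector (a trained ConvNet would learn its weights).
kernel = [[-1, 1], [-1, 1]]

print(conv_stage(image, kernel))  # → [[2.0, 0.0], [2.0, 0.0]]
```

The output is a smaller feature map that responds strongly only where the edge is, which is the kind of intermediate representation a ConvNet builds and then feeds to the next stage.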

Deep Learning systems are being deployed in an increasingly large number of applications such as photo and video collection management, content filtering, medical image analysis, face recognition, self-driving cars, robot perception and control, speech recognition, natural language understanding, and language translation.

But we are still quite far from emulating the learning abilities of animals and humans. A key element we are missing is predictive (or unsupervised) learning: the ability of a machine to model the environment, predict possible futures, and understand how the world works by observing it and acting in it. This is a very active topic of research at the moment.

https://indico.cern.ch/event/510372/

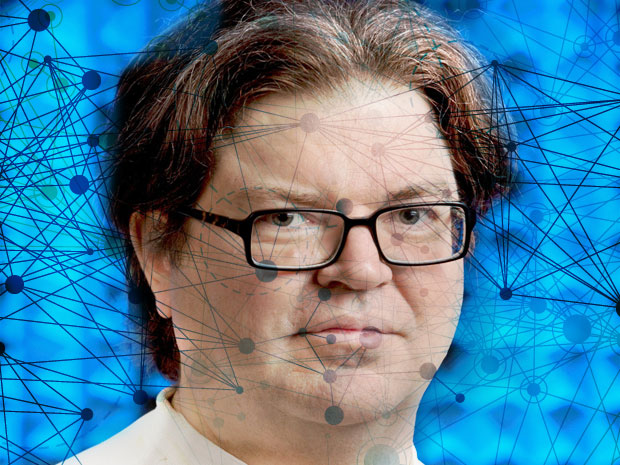

Yann LeCun, Facebook AI Research & New York University.

Who is Yann LeCun?

Few people have been more closely associated with Deep Learning than Yann LeCun, 54. Working as a Bell Labs researcher during the late 1980s, LeCun developed the convolutional network technique and showed how it could be used to significantly improve handwriting recognition; many of the checks written in the United States are now processed with his approach. Between the mid-1990s and the late 2000s, when neural networks had fallen out of favor, LeCun was one of a handful of scientists who persevered with them. He became a professor at New York University in 2003 and has since spearheaded many other Deep Learning advances.

More recently, Deep Learning and its related fields have grown into one of the most active areas of computer science research, which is one reason that, at the end of 2013, LeCun was appointed head of the newly created Artificial Intelligence Research Lab at Facebook, though he continues with his NYU duties.

LeCun was born in France, and retains from his native country a sense of the importance of the role of the “public intellectual.” He writes and speaks frequently in his technical areas, of course, but is also not afraid to opine outside his field, including about current events.

http://spectrum.ieee.org/