Google Artificial Intelligence (AI) Chief Scientist Professor Fei-Fei Li, Director of the Stanford Artificial Intelligence Lab, talked about “Artificial Intelligence in Medicine” and how it could transform the workflow in healthcare and the way we diagnose, treat and prevent disease.

I actually feel inadequate in front of this audience. I know very little about medicine, so I’m here to learn and interact with you. My talk title is “Guardian Angels: Towards Artificial Intelligence assisted care”.

Let me first acknowledge Prof. Arnold Milstein, M.D. He’s my long-term collaborator in this project, or rather in this collection of projects that I will share with you. Together, this is a collaboration between the Stanford engineering school and the Stanford Medical School, as well as two centers: one is the Clinical Excellence Research Center, and the other is an interdisciplinary program in Artificial Intelligence assisted care.

This is my grandma. She is, as of now, 95 years old. She lives in a small city in southern China with 12 million people. Between me and some of her children, we call her every day, and she is actually still living independently by herself. I am very close to my grandma. She’s very, very dear to me, and I care a lot about her needs. I know that for my grandma at this age, in addition to, you know, family bonds and everything, quality of life matters a lot, both for her and for her family, for someone like me.

* Health

* Independent and Safe

* Quality care

We care about her health. At this age, she has high blood pressure, for example, and we worry about that. We care about her need to be independent and safe in her home. She wants to live independently as long as possible. And in case she needs to go to a hospital, we care about the kind of care she receives: high-quality care.

This really summarizes my motivation for getting into medicine and collaborating with medicine. I started to think: in this age of AI and technology, what can we do to help people and clinicians improve the quality of care and improve the quality of people’s lives?

In today’s talk, I’m not going to talk too much about medicine. My fellow presenters on this panel will share with you the most awesome and latest work they’re doing. I’m actually going to turn my attention more to the well-being and health of both senior citizens and patients who stay in hospitals.

So, like I said, this is our hypothesis. We actually spent a lot of time talking and thinking about what Artificial Intelligence can help with, and we’ve arrived at this very important hypothesis for the work that we’re doing now, which I’m going to share with you.

The “guardian angel” hypothesis:

Artificial Intelligence (AI) technology can help better the workflow of healthcare

It’s the “guardian angel” hypothesis: Artificial Intelligence technology can help better the workflow of healthcare. For the rest of the talk, I’ll try to shed some light on how this would work. Here is an example scenario.

This is a recording of a senior living independently in his studio. While working together on AI-assisted care, we talked a lot to clinicians and health workers in senior centers and senior homes to learn and understand what clinicians, as well as the elders themselves, need in order to ensure high-quality, independent and safe living.

There is a long list of things that clinicians, family members and the elders themselves worry about or think about. They range from eating patterns and sleep patterns to whether they fall, whether they have movement changes, unstable gestures, early signs of dementia, day and night activity pattern reversals, fluid intake and so on.

The truth is, a lot of the clinicians we talk to tell us that they don’t really have a good way of finding out. Having a grandma who is 95 years old, I can really relate to that. We call her every day. We ask her about what she does. But other than that 15-minute phone call, we don’t really know what’s happening. It’s really hard to tap into the continuous behavior and activities. This was one of the early inspirations for our work and collaboration in this space of technology, because we do a lot of modern computer vision, and we have smart sensors like cameras, depth sensors and thermal sensors that might help us monitor, or watch like a guardian angel, what’s happening.

That’s really our inspiration and intuition: there is something that computer vision can do to help. As we talk more and more to clinicians, we realize that across the board of health care, from just living at home all the way to the emergency room, the operating room, intensive care and the hospital ward, there is a need to understand what’s happening and what the activities are, and to ensure the quality of care and the safety of the patient. That need is tremendous.

Hand hygiene is one need that cuts across all medical units. Triaging in the emergency department and understanding how people’s conditions are changing in the waiting room is another big concern. So are protocols in the intensive care unit: are we really following the central line insertion protocols? How is the patient’s condition? All of these seem to be areas that could benefit from better and continuous observation.

Critical needs: Track, understand and (when needed) modify human behaviors

* Patients: RASS, pain level, mobility, vitals, etc.

* Clinicians: hand hygiene, bundle protocols, patient interactions, operation procedures, etc.

Current methods: Sparse, labor-intensive, erroneous, subjective, costly

AI-assisted Care: real-time, continuous, accurate, objective, cost-effective

We started to realize that there is a critical need in the health care environment to track, understand and, when needed, modify human behaviors. For patients, we want to understand their pain level, their mobility, their vitals, their living conditions. For clinicians, it’s hand hygiene, bundle protocols, patient interactions, operation procedures, and the list just goes on and on. With these needs in mind, we looked at the current situation: what’s the current method?

The truth is that there isn’t much of a current method. There are a lot of procedures and protocols designed to use people to watch people, and that’s extremely expensive. It’s sparse: just like I call my grandma for at most 10 or 15 minutes per day, I don’t get to know the 24/7 behavior. It’s erroneous. One clinician’s assessment of a patient’s mobility level could be very different from another clinician’s assessment. It’s subjective, and it’s really costly.

Our hypothesis says Artificial Intelligence might be able to help here. Artificial Intelligence is a technology that’s becoming more and more mature and capable. It can be real-time and it can be continuous. It’s accurate, if we train the models well. It’s relatively objective, and it’s cost-effective. This is very much on our mind when we think about improving health care as well as keeping the cost down.

That’s the big picture. In fact, the most important slide of this talk is this one. For the rest of the talk, I’ll show you a little bit of the basic technology that we are using to understand the environment as well as human behavior, and also quickly show you a couple of projects that are going on.

Computer Vision:

To see and to understand the world

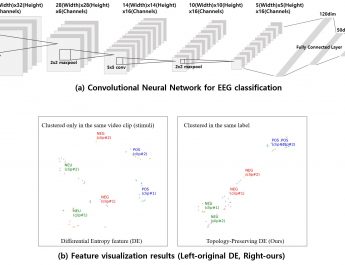

We’re going to use computer vision technology to see and understand the visual world. Much of today’s computer vision technology relies on a technique called deep learning, which is a neural network architecture that learns to observe and understand the world, for example to recognize cats, since they’re everywhere.

We can also detect objects. Scenes can be quite cluttered, as you can imagine in a hospital room or in a studio, with a lot of objects, and we can detect many of them: the people, the environment, the background objects. We can even use this deep learning technology to communicate with a person who might not be there at the scene by generating sentences like this one, or this one. This is a machine-generated sentence describing the scene.

We can actually get into more of the details of a visual scene and talk about specific areas. Think about a senior person who is working in the kitchen. We can focus on what she is doing with the cooking and the objects she’s using. Is she cooking lots of meat, or taking fruits, and so on? These are just examples.

We can also work in the video domain by looking at different behaviors. For example, this is a YouTube video where, if you just look at the first line of the label, it shows what kind of sports game people are playing. This is another piece of work we’re doing that shows how to zoom in on different people individually. You can imagine this could be useful in a hospital situation, for looking at and understanding what each person’s individual behavior is.

This is a particular piece of work we’re doing in the hospital to track people without revealing their identity. If privacy is a concern, we use only a depth sensor to track people and understand their behavior. This is another project we’re doing, in an actual, real-world European train station, tracking many, many people and looking at their behavior, which might be useful in a busy hospital or emergency department.

Those were the basic technologies that we’re developing in our lab to understand and see the world. As for what we are applying them to, one project we’re doing with the Lucile Packard Children’s Hospital is on hand hygiene in hospitals. Hand hygiene is a hugely important issue in healthcare. It costs hospitals and governments billions and billions of dollars to combat bad hand hygiene, which results in hospital-acquired infections.

What is the problem?

HAI: Hospital acquired infections

* New England Journal of Medicine, March 2014:

– 1 in 25 patients with HCAIs

* CDC report 2007

– Cost of HCAI: $35.7 to $45 billion

* Hand Hygiene (HH) is a leading driver of HAI

We have worked with one hospital unit in the Lucile Packard Children’s Hospital to put depth sensors everywhere, inside and outside of the patient rooms, to cover almost all areas and to begin to monitor how clinicians behave in terms of hand hygiene.

We hope that by looking at this we can provide a much better assessment of hand hygiene behavior, and solutions.
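As an illustration of the kind of assessment this could enable, here is a minimal sketch of how a hand hygiene compliance rate might be computed once a vision system has turned depth-sensor footage into discrete events. Everything in it is an assumption made for illustration: the `RoomEntry` record, the `used_dispenser` flag and the sample data are hypothetical and do not come from the project described in the talk.

```python
from dataclasses import dataclass


@dataclass
class RoomEntry:
    """One detected entry into a patient room (hypothetical event record)."""
    timestamp_s: float    # seconds since the start of observation
    used_dispenser: bool  # True if hand hygiene was detected at entry


def compliance_rate(entries):
    """Return the fraction of entries with detected hand hygiene.

    Returns None when there are no entries, since a rate is undefined.
    """
    if not entries:
        return None
    compliant = sum(1 for e in entries if e.used_dispenser)
    return compliant / len(entries)


# Made-up sample events, for illustration only.
sample = [
    RoomEntry(10.0, True),
    RoomEntry(95.5, False),
    RoomEntry(240.0, True),
    RoomEntry(600.2, True),
]

rate = compliance_rate(sample)
print(f"Compliance over {len(sample)} entries: {rate:.0%}")
```

The point of the sketch is only that continuous sensing would let such a rate be computed over every room entry, rather than over the few entries a human auditor happens to observe.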

The ICU

Highest mortality unit in any hospital (8-19%)

Highest complexity of care

– Nurse ratio 1:1 or 1:2

– 20% of patients experience an adverse event

  * Avg increase in hospital stay = 31 days

  * 50% are preventable
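The slide figures above can be combined in a quick back-of-the-envelope calculation. This is only a sketch under stated assumptions: it reads "50% are preventable" as applying to adverse events, and it assumes the 31-day average increase in stay applies equally to preventable events. Neither reading is confirmed in the talk, and the function names are hypothetical.

```python
# Figures as read off the slide; how they combine is an assumption.
ADVERSE_EVENT_RATE = 0.20   # share of ICU patients with an adverse event
PREVENTABLE_SHARE = 0.50    # assumed: share of adverse events that are preventable
EXTRA_DAYS_PER_EVENT = 31   # average increase in hospital stay, in days


def preventable_events(n_patients):
    """Expected number of preventable adverse events among n_patients."""
    return n_patients * ADVERSE_EVENT_RATE * PREVENTABLE_SHARE


def preventable_extra_days(n_patients):
    """Expected extra hospital days attributable to preventable events."""
    return preventable_events(n_patients) * EXTRA_DAYS_PER_EVENT


n = 100
print(f"Per {n} ICU patients: about {preventable_events(n):.0f} preventable "
      f"adverse events, about {preventable_extra_days(n):.0f} extra hospital days")
```

Under these assumptions, roughly one ICU patient in ten would experience a preventable adverse event.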

Another project is a Smart ICU project. The ICU is the highest-mortality unit in a hospital. We’re hoping to use modern sensors to help doctors and clinicians assess a patient’s situation, such as sedation level, pain and mobility, and also to help automatically document nurse activities to reduce the burden of documentation.

I’m not going to get into the last one, which is Senior Independent Living. It is very dear to my heart, and we’re collaborating on it with a senior center in San Francisco to assess the living situation of seniors and help clinicians make a better assessment of their condition.

So there’s a lot to be done in Artificial Intelligence assisted healthcare. I think this is a really new, exciting area where we’re just starting to crack open the doors. I really welcome more people working on these problems. Personally, I’m very grateful that I’m working with really brilliant students and postdocs at Stanford to make this happen.