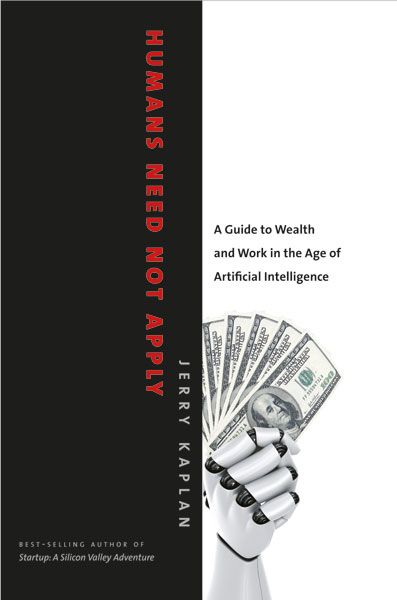

Jerry Kaplan’s latest book is “Humans Need Not Apply: A Guide to Wealth and Work in the Age of Artificial Intelligence.”

Selected as one of the 10 best science and technology books of 2015 by The Economist, Humans Need Not Apply is a call to arms for the age of artificially intelligent machines. The robots are coming, but whether they will be working on behalf of society or a small cadre of the super-rich is very much in doubt.

Entrepreneur and technical innovator Jerry Kaplan argues that without adjustments to our economic system and regulatory policies, we may be in for an extended period of social turmoil. Widespread poverty against a backdrop of escalating comfort and wealth is not just the stuff of science fiction dystopias, but a very real risk for our future.

Kaplan proposes innovative, free-market adjustments to our economic system and social policies to avoid an extended period of social turmoil, delivering a timely and accessible analysis of the promise and perils of artificial intelligence.

(http://www.oxfordmartin.ox.ac.uk)

About the Book:

After billions of dollars and fifty years of effort, researchers are finally cracking the code on artificial intelligence. As society stands on the cusp of unprecedented change, Jerry Kaplan unpacks the latest advances in robotics, machine learning, and perception powering systems that rival or exceed human capabilities. Driverless cars, robotic helpers, and intelligent agents that promote our interests have the potential to usher in a new age of affluence and leisure – but as Kaplan warns, the transition may be protracted and brutal unless we address the two great scourges of the modern developed world: volatile labor markets and income inequality.

He proposes innovative, free-market adjustments to our economic system and social policies to avoid an extended period of social turmoil. His timely and accessible analysis of the promise and perils of artificial intelligence is a must-read for business leaders and policy makers on both sides of the aisle.

Jerry Kaplan:

“Everybody’s concerned about killer robots: we should ban them, we shouldn’t do any research into them, it may be unethical to do so. There’s a wonderful paper, in fact, by a professor at the Naval Postgraduate School in Monterey, I believe, B.J. Strawser. I believe the title is ‘The Moral Requirement to Deploy Autonomous Drones.’ And his basic point is really pretty straightforward. We have obligations to our military forces to protect them, and there are things we can do which may protect them. A failure to do that is itself an ethical decision, and it may be the wrong thing to do if you have the technologies.”

“So let me give you an interesting example and scale that whole thing down, to show you this doesn’t have to be about Terminator-like robots coming in and shooting at people. Think about a landmine. A landmine has a sensor, a little switch: you step on it and it blows up. There’s a sensor, and there’s an action that’s taken as a result of a change in its environment. Now it’s a fairly straightforward matter to take some artificial intelligence technologies right off the shelf today and put a little camera on that. It’s not expensive, the same kind you have in your cell phone. With a little bit of processing power, it could look at what’s actually happening around that landmine. And you might think, well okay, I can see that the person who is near me is carrying a gun. I can see that they’re wearing a military uniform, so I’m going to blow up. But if I see it’s just some peasant out in a field with a rake or a hoe, I can avoid blowing up under the circumstances. Oh, that’s a child; I don’t want to blow up. I’m being stepped on by an animal; okay, I’m not going to blow up. Now that is an autonomous military technology of just the sort addressed by a recent letter signed by a great many scientists. This falls into that class.”

“And they’re urging that devices like that be banned. But I give this as an example of a device for which there’s a good argument that if we can deploy that technology, it’s more humane, more targeted and more ethical to do so. Now that isn’t always the case. My point is not that that’s right and you should just go ahead willy-nilly and develop killer robots. My point is that this is a much more subtle area, which requires considerably more thought and research. And we should let the people who are working on it think through these problems, and make sure that they understand the kinds of sensitivities and concerns that we have as a society about the use and deployment of these types of technologies.”

Who is Jerry Kaplan?

He is widely known in the computer industry as a serial entrepreneur, technical innovator, and best-selling author. He is currently a Fellow at the Center for Legal Informatics at Stanford University and teaches the ethics and impact of artificial intelligence in the Computer Science Department.

Kaplan received a bachelor’s degree in history and philosophy of science from the University of Chicago (1972) and a doctorate in computer and information science from the University of Pennsylvania (1979), and was a research associate in Computer Science at Stanford from 1979 to 1981.

Jerry Kaplan: “Humans Need Not Apply” | Talks at Google

The common wisdom about Artificial Intelligence is that we are building increasingly intelligent machines that will ultimately surpass human capabilities, steal our jobs, possibly even escape human control and take over the world. Jerry Kaplan, author of Humans Need Not Apply: A Guide to Wealth and Work in the Age of Artificial Intelligence, shares with us why this narrative is both misguided and counterproductive. Hal Varian also shares his thoughts in the Q&A.

In his talk, Jerry Kaplan addresses the commonly misplaced fears and misconceptions around Artificial Intelligence. A more appropriate framing, better supported by actual events and current trends, is that AI is simply a natural expansion of longstanding efforts to automate tasks, dating back at least to the start of the industrial revolution. Stripping the field of its gee-whiz apocalyptic gloss makes it easier to predict the likely benefits and pitfalls of this important technology. AI has the potential to usher in a new age of affluence and leisure, but it’s likely to roil labor markets and increase inequality unless we address these pressing societal problems. The robots are certainly coming, but whether they will benefit society as a whole or serve the needs of the few is very much in doubt. Join me for an unorthodox tour of the history of Artificial Intelligence to learn why it is so misunderstood and what we can do to ensure that the engines of progress don’t motor on without us.

Jerry Kaplan is widely known as a serial entrepreneur, technical innovator, best-selling author, and futurist. He co-founded four Silicon Valley startups, two of which became publicly traded companies. His best-selling non-fiction novel “Startup: A Silicon Valley Adventure” was selected by Business Week as one of the top ten business books of the year. His latest book, “Humans Need Not Apply: A Guide to Wealth and Work in the Age of Artificial Intelligence,” was published in August 2015 by Yale University Press. Mr. Kaplan is currently a Fellow at the Stanford Center for Legal Informatics and teaches the ethics and impact of Artificial Intelligence in the Computer Science Department. He holds a BA from the University of Chicago in History and Philosophy of Science and a PhD in Computer Science from the University of Pennsylvania.

#1 fear is just a word) I will be at the bookstore. I love to rest my eyes on paper when I absorb data & intelligence. (Back to my point) I’m purchasing a copy as an investment in my own future! It’s challenging to be one step ahead of the other guy; knowledge & a game plan are key. If I had an AI personal robot, I’d feel better equipped for the 21st century and beyond… Sky’s not the limit anymore!

Thanks for posting this informative article!