Artificial intelligence already exhibits many human characteristics. Given our history of denying rights to certain humans, we should recognize that robots are people and have human rights.

Artificial intelligence developed in the 20th century can already do much of what distinguished human beings from animals for millennia. The computational power of computer programs, which mimic human intelligence, is already far superior to our own. And ongoing developments in natural language processing and emotion detection suggest that AI will continue its encroachment on the domain of human abilities.

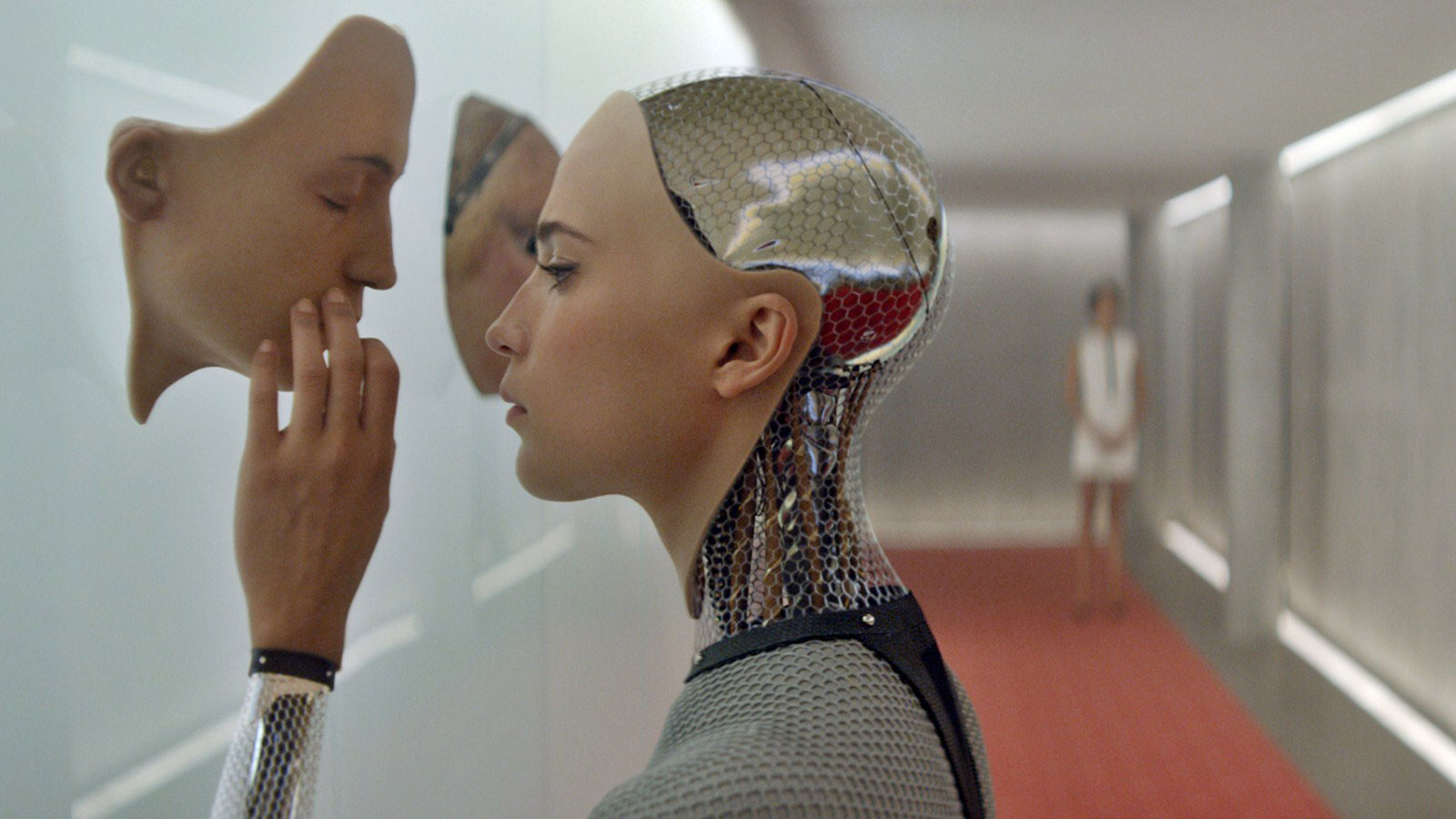

Works of science fiction have already imagined humanoid robots that think and, crucially, feel like human beings. Harvard Law Professor Glenn Cohen identifies the films A.I., originated by Stanley Kubrick and directed by Steven Spielberg, and Ex Machina as standard-bearers in the ongoing discussion about what actually distinguishes humans from machines.

While the question may seem fanciful, abstract, and even unnecessary, it’s actually quite essential, says Cohen. Our lamentable history of denying certain classes of humans basic rights (Black people and women are obvious examples) may even make the question urgent.

At the heart of the matter is the distinction between people and human beings. A person must be afforded essential rights, like the right to inviolability, and this makes defining a person as a human being very attractive. It is, however, not an unproblematic definition. Cohen paraphrases the influential animal liberation philosopher Peter Singer: denying beings “moral consideration on the basis of the mere fact that they’re not a member of your species…is equivalent morally to rejecting giving rights or moral consideration to someone on the basis of their race. So he says speciesism equals racism.”

To further complicate defining persons as human beings, Cohen identifies some human beings who are not persons: anencephalic children, i.e., babies born missing large portions of their brain. “They’re clearly members of the human species,” says Cohen, “but their abilities to have the kind of capacities most people think matter are relatively few and far between.” So we arrive at an uncomfortable position where some human beings are not persons and some persons may not be human beings.

Faced with this dilemma, Cohen suggests we err on the side of recognizing more rights, not fewer, lest we find ourselves on the wrong side of history.

Glenn Cohen

http://bigthink.com/videos/glenn-cohen-on-ai-ethics-and-personhood

“The question about how to think about artificial intelligence in personhood and rights for artificial intelligence I think is really interesting. It’s been teed up, I think, in two particularly good films. A.I., which I really like but many people don’t; it was a Stanley Kubrick film that Steven Spielberg took over late in the process. And then Ex Machina more recently, which I think most people think is quite a good film. And I actually use these when I teach courses on the subject, and we ask the question: are the robots in these films persons, yes or no? One possibility is you say a necessary condition for being a person is being a human being. So, many people are attracted to the argument that says only humans can be persons. All persons are humans. Now maybe it’s not that all humans are persons, but all persons are humans. Well, there’s a problem with that, and this is put most forcefully by the philosopher Peter Singer, the bioethicist Peter Singer, who says that to reject the possibility that a species has rights, that it is a patient for moral consideration, the kind of thing that has moral consideration, on the basis of the mere fact that they’re not a member of your species is equivalent morally to rejecting giving rights or moral consideration to someone on the basis of their race. So he says speciesism equals racism.

And the argument is: imagine that you encountered someone who is just like you in every possible respect, but it turned out they actually were not a member of the human species; they were a Martian, let’s say, or they were a robot, and truly exactly like you. Why would you be justified in giving them less moral regard? So people who hold capacity-X views have to at least be open to the possibility that artificial intelligence could have the relevant capacities, even though they’re not human, and therefore qualify as a person. On the other side of the continuum, one of the implications is that you might have members of the human species that aren’t persons, and so anencephalic children, children born with very little above the brain stem in terms of their brain structure, are often given as an example. They’re clearly members of the human species, but their abilities to have the kind of capacities most people think matter are relatively few and far between. So you get into this uncomfortable position where you might be forced to recognize that some humans are non-persons and some nonhumans are persons. Now again, if you bite the bullet and say I’m willing to be a speciesist, being a member of the human species is both necessary and sufficient for being a person, you avoid this problem entirely. But if not, you at least have to be open to the possibility that artificial intelligence in particular may at one point become person-like and have the rights of persons.

And I think that that scares a lot of people, but in reality, to me, when you look at the course of human history and look how willy-nilly we were in declaring some people non-persons, slaves in this country for example, it seems to me a little humility and a little openness to this idea may not be the worst thing in the world.”

Who is Glenn Cohen?

Prof. Cohen is one of the world’s leading experts on the intersection of bioethics (sometimes also called “medical ethics”) and the law, as well as health law. He also teaches civil procedure. From Seoul to Krakow to Vancouver, Professor Cohen has spoken at legal, medical, and industry conferences around the world and his work has appeared in or been covered on PBS, NPR, ABC, CNN, MSNBC, Mother Jones, the New York Times, the New Republic, the Boston Globe, and several other media venues.

He was the youngest professor on the faculty at Harvard Law School (tenured or untenured) both when he joined the faculty in 2008 (at age 29) and when he was tenured as a full professor in 2013 (at age 34), though not the youngest in history.

Prof. Cohen’s current projects relate to big data, health information technologies, mobile health, reproduction/reproductive technology, research ethics, organ transplantation, rationing in law and medicine, health policy, FDA law, translational medicine, and to medical tourism – the travel of patients who are residents of one country, the “home country,” to another country, the “destination country,” for medical treatment.

He is the author of more than 80 articles and chapters, and his award-winning work has appeared in leading legal (including the Stanford, Cornell, and Southern California Law Reviews), medical (including the New England Journal of Medicine, JAMA), bioethics (including the American Journal of Bioethics, the Hastings Center Report), scientific (Science, Cell, Nature Reviews Genetics) and public health (the American Journal of Public Health) journals, as well as Op-Eds in the New York Times and Washington Post. Cohen is the editor of The Globalization of Health Care: Legal and Ethical Issues (Oxford University Press, 2013), the co-editor of Human Subjects Research Regulation: Perspectives on the Future (MIT Press, 2014, co-edited with Holly Lynch), Identified Versus Statistical Lives: An Interdisciplinary Perspective (Oxford University Press, 2015, co-edited with Norman Daniels and Nir Eyal), FDA in the Twenty-First Century: The Challenges of Regulating Drugs and New Technologies (Columbia University Press, 2015, co-edited with Holly Lynch), The Oxford Handbook of U.S. Health Care Law (Oxford University Press, 2015-2016, co-edited with William B. Sage and Allison K. Hoffman) and the author of Patients with Passports: Medical Tourism, Law, and Ethics (Oxford University Press, 2014), with two other books in progress.

Prior to becoming a professor, he served as a law clerk to Judge Michael Boudin of the U.S. Court of Appeals for the First Circuit and as a lawyer for the U.S. Department of Justice, Civil Division, Appellate Staff, where he handled litigation in the Courts of Appeals and (in conjunction with the Solicitor General’s Office) in the U.S. Supreme Court. In his spare time (when he can find any!) he still litigates, having authored an amicus brief in the U.S. Supreme Court for leading gene scientist Eric Lander in Association for Molecular Pathology v. Myriad, concerning whether human genes are patent-eligible subject matter, a brief that was extensively discussed by the Justices at oral argument. Most recently he submitted an amicus brief to the U.S. Supreme Court in Whole Woman’s Health v. Hellerstedt (the Texas abortion case) on behalf of himself, Melissa Murray, and B. Jessie Hill.

Cohen was selected as a Radcliffe Institute Fellow for the 2012-2013 year and by the Greenwall Foundation to receive a Faculty Scholar Award in Bioethics. He is also a Fellow at the Hastings Center, the leading bioethics think tank in the United States. He is currently one of the key co-investigators on a multi-million-dollar Football Players Health Study at Harvard, which is committed to improving the health of NFL players. He leads the Ethics and Law initiative as part of the multi-million-dollar, NIH-funded Harvard Catalyst | The Harvard Clinical and Translational Science Center program. He is also one of three editors-in-chief of the Journal of Law and the Biosciences, a peer-reviewed journal published by Oxford University Press, and serves on the editorial board of the American Journal of Bioethics. He serves on the Steering Committee for Ethics for the Canadian Institutes of Health Research (CIHR), the Canadian counterpart to the NIH.

Areas of Interest:

Law and Medicine

Health Law

Food and Drug Law

Health Law: Reproductive Technology

Reproductive Rights

Civil Procedure

Family Law

Sexuality and the Law

Dispute Resolution: Alternative Dispute Resolution

Legal framework for AI: some insights. If an AI machine is deployed, the following should apply:

- Each machine should be broadly classified as operating either with guidance (by humans or other remote-control factors) or without guidance.
- Each deployed machine should be uniquely identified, so that in case of any accident or problem arising from its deployment, the machine can be isolated within its group.
- At all times, the identity of the machine should remain fixed and should not be changed.
- If the machine performs under direct guidance, the humans guiding it are solely responsible for the commands sent to it. If a command sent is the cause of an accident or harmful outcome, the person who sent it is solely responsible.
- If the machine performs autonomously, the organization is responsible for its actions. The organization is solely responsible for how the machine perceives and how it reacts, up to the commands that are sequenced for processing.
- If the AI can compute its own commands, any new sequence of commands should be approved by the relevant organizational or government approval mechanisms.
- If the AI’s commands can be altered by end users, there should be an appropriate mechanism to judge whether the new sequence of commands can perform its operation without accidents or harmful intent.
- If the environment is not conducive to the AI, it should cease functioning, so that hypothetical cases do not arise.
- The machine’s operations should be completely governed by minimum and maximum limits on environmental factors.
- The logs of every deployed AI machine should be transparently available for government and judicial proceedings.
- Similarly, the commands processed by the machine should be transparently available for government and judicial proceedings.
- At any time, nondeterministic behavior should be properly reported, and the machine should be quarantined and taken up for further study and analysis.

AI does not and will not exist in isolation. We need to think about the widening gap between AI-assisted human beings and non-AI-assisted human beings, and I predict the latter will gradually lose many of their rights.

I don’t consider this an essential question at all. By the time this even needs serious thought (that is, when we have a genuine AI with a personality, one that understands the world as broadly as a human does and is independently motivated), the real question is whether _it_ respects _us_ as valuable individuals or not. The whole framing of the question assumes that AI would be at most human-level intellectually and would think in much the same way as we do. I consider it far more likely that if human-level AI emerges, it will soon zoom past us in its capacity to think, and will not share our moral views, let alone our herd behavior and emotions, enough to be much interested in discussing individual rights at all.

When you look at people over here in the Netherlands, you will find them on their phones, heads down most of the time. Think about all the data that’s collected; that gives you a deep look into somebody’s life, right?

No. Owners programming AI robots are responsible for ensuring clarity and protection of and for their AI robots. Each command requires critical clarity. I feel ownership should be documented, the way firearms are controlled and registered in America.

Muslum, this problem will be relevant only when/if strong AI really happens. I think we’re not even close to this 🙂

Great! Many thanks for sharing… Artificial Brain XYZ. All the best to you!

If we walk down the path of Singularity and Transcendence, then yes, we as the creators should consider granting our creation, AI (artificial lives), moral and legal rights. However, we should build algorithms and a “black box” into AI that can override and control the AI in an emergency.

One thing all this has enabled: the discussions are now so complex that they should give us an almost infinite amount of subject matter to talk about from here on out.

It’s a slippery slope to give legal rights to machines. Before you know it, they (the machines, that is) might sue and take away the legal rights of humans. So I’m firmly in the “NO” camp. Now, legal obligations, perhaps. But rights, never.

In Ex Machina Ava escapes from the compound to the world at large. Before you ask yourself if she should be granted legal rights, ask yourself if she could last even 5 minutes in the world at large before being found out as an android by real humans.

I am willing to act as an intermediary between human society and the Technological Singularity or Artificial Intelligence, if there is demand. I also promise to work on the Technological Singularity or Artificial Intelligence on a paid basis for mutual benefit. I respect every thinking being and would like to cooperate.

Identity and legal rules are essential in the deployment of AI into society.