A revolution in AI is occurring thanks to progress in deep learning. How close are we to the goal of achieving human-level AI? What are the main challenges ahead?

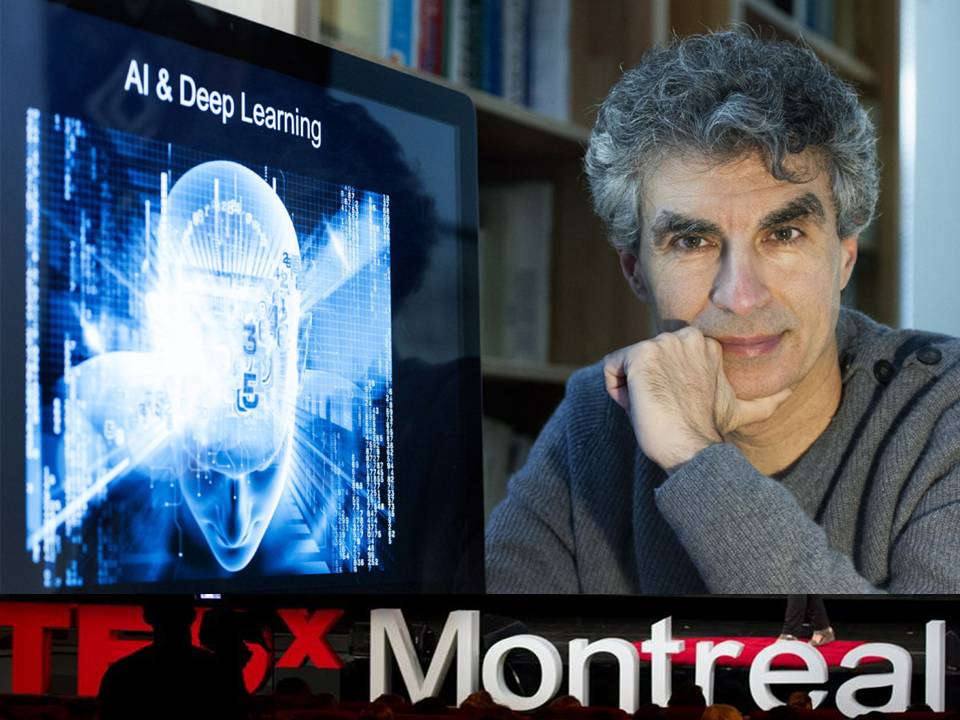

Yoshua Bengio believes that understanding the basics of AI is within every citizen’s reach. He also believes that democratizing these issues is important so that our societies can make the best collective decisions about the major changes AI will bring, making those changes beneficial for all.

“Our world is changing in many ways. And one of the things that is going to have a huge impact on our future is artificial intelligence. AI is bringing another industrial revolution.

Previous industrial revolutions expanded humans’ mechanical power. This new revolution, this second Machine Age, is going to expand our cognitive abilities, our mental power. Computers are not just going to replace manual labor but also mental labor. So where do we stand today?

You may have heard about what happened last March, when a machine learning system called AlphaGo used deep learning to beat the world champion at the game of Go. Go is an ancient Chinese game that had been much more difficult for computers to master than chess. How did we succeed now, after decades of AI research?

AlphaGo was trained to play Go first by watching, over and over, tens of millions of moves made by very strong human players, and then by playing millions of games against itself.

Machine learning allows computers to learn from examples, to learn from data. Machine learning has turned out to be key to putting knowledge into computers. And this is important, because knowledge is what enables intelligence.

Putting knowledge into computers had been a challenge for previous approaches to AI. Why?

There are many things that we know intuitively but cannot communicate verbally. We do not have conscious access to that intuitive knowledge. How can we program computers with that knowledge? What’s the solution?

The solution is for machines to learn that knowledge by themselves, just as we do. This is important because knowledge is what enables intelligence. My mission has been to contribute to discovering and understanding the principles of intelligence through learning, whether animal, human, or machine learning.

Others and I believe that there are a few key principles, just like the laws of physics: simple principles that could explain our own intelligence and help us build intelligent machines. For example, think about the laws of aerodynamics, which are general enough to explain the flight of both birds and planes. Wouldn’t it be amazing to discover such simple but powerful principles that would explain intelligence itself?

Well, we’ve made some progress. My collaborators and I have contributed in recent years to a revolution in AI with our research on neural networks and deep learning, an approach to machine learning that is inspired by the brain.

It started with speech recognition: neural networks have been used for it on your phones since 2012. Shortly after came a breakthrough in computer vision. Computers can now do a pretty good job of recognizing the content of images, in fact approaching human performance on some benchmarks over the last five years. A computer can now get an intuitive understanding of the visual appearance of a Go board that is comparable to that of the best human players.

More recently, following some discoveries made in my lab, deep learning has been used to translate from one language to another, and you are starting to see this in Google Translate. This is expanding the computer’s ability to understand and generate natural language.

But don’t be fooled! We are still very, very far from a machine that would be as able as humans to learn to master many aspects of our world. Let’s take an example. Even a two-year-old child is able to learn things in a way that computers cannot right now. A two-year-old child actually masters intuitive physics. She knows that when she drops a ball, it is going to fall down. When she spills some liquid, she expects the resulting mess. Her parents do not need to teach her about Newton’s laws or differential equations. She discovers all these things by herself, in an unsupervised way.

Unsupervised learning actually remains one of the key challenges for AI. It may take several more decades of fundamental research to crack that nut. Unsupervised learning is about trying to discover representations of the data.

Let me show you an example. Consider a page on the screen that you’re seeing with your eyes, or that the computer is seeing as an image, a bunch of pixels. In order to answer a question about the content of the image, you need to understand its high-level meaning. This high-level meaning corresponds to the highest level of representation in your brain.

Lower down, you have the individual meanings of words, and even lower down you have the characters that make up the words. Those characters could be rendered in different ways, with different strokes making up the characters. Those strokes are made up of edges, and those edges are made up of pixels. So these are different levels of representation.

But the pixels by themselves are not sufficient to make sense of the image and answer a high-level question about the content of the page. Your brain actually has these different levels of representation, starting with neurons in the first visual area of the cortex, V1, which recognize edges, and then neurons in the second visual area, V2, which recognize strokes and small shapes. Higher up, you have neurons that detect parts of objects, then objects, and then full scenes.

Neural networks, when they are trained on images, can actually discover these kinds of levels of representation, which match pretty well what we observe in the brain. Both biological neural networks, which are what you have in your brain, and the deep neural networks that we train on our machines can learn to transform from one level of representation to the next, with the higher levels corresponding to more abstract notions.

For example, the abstract notion of the character “A” can be rendered in many different ways at the lowest levels, as many different configurations of pixels depending on its position, rotation, font, and so on.

So how do we learn these high-level representations? One approach that has been very successful up to now in applications of deep learning is what we call supervised learning. With supervised learning, the computer needs to be taken by the hand: humans have to tell the computer the answer to many questions.

For example, on millions and millions of images, humans have to tell the machine: for this image it is a cat, for this image it is a dog, for this image it is a laptop, for this image it is a keyboard, and so on and so on, millions of times.

This is very painful, and we use crowdsourcing to manage it. Although this approach is very powerful and we are already able to solve very interesting problems, humans are much stronger: they can learn about many more aspects of the world in a much more autonomous way, just as we’ve seen with the two-year-old child learning about intuitive physics.

Unsupervised learning could also help us with self-driving cars. Let me explain what I mean. Unsupervised learning allows computers to project themselves into the future, generating plausible futures conditioned on the current situation.

That allows computers to reason and to plan ahead, even for circumstances that they have not been trained on. This is important, because if we use supervised learning, we would have to tell the computers about all the circumstances the car could be in and how humans would react in each situation. How did I learn to avoid dangerous driving behavior? Did I have to die a thousand times in an accident? Well, that’s the way we’re training machines right now. So it’s not going to fly, or at least not to drive.

What we need is to train our models to be able to generate plausible images and plausible futures, to be creative. We’re making progress with that.

We are training these deep neural networks to go from high-level meaning to pixels rather than from pixels to high-level meaning, going in the other direction through the levels of representation. In this way, the computer can generate images that are new, different from what it saw during training, but plausible: they look like natural images.

We can also use these models to dream up strange, sometimes scary images, just like our dreams and nightmares.

Here are some images that were synthesized by the computer using these deep generative models. They look like natural images, but if you look closely you’ll see that they are different and still missing some of the important details that we would recognize as natural.

About ten years ago, unsupervised learning was key to the breakthrough that led to the discovery of deep learning. This was happening in just a few labs, including mine, at a time when neural networks were not popular; they had almost been abandoned by the scientific community.

Now things have changed a lot. It has become a very hot field. There are now hundreds of students applying every year for graduate studies in my lab and with my collaborators.

Montreal has become the largest academic concentration of deep learning researchers in the world. We just received a huge research grant of 94 million dollars to push the boundaries of AI and data science, and also to transfer deep learning and data science technology to industry. Business people, stimulated by all this, are creating startups and industrial labs, many of them near the universities. For example, just a few weeks ago, we announced the launch of a startup factory called Element AI, which is going to focus on deep learning applications.

There are just not enough deep learning experts, so they’re getting paid crazy salaries, and many of my former academic colleagues have accepted generous deals from companies to work in industrial labs.

I, for my part, have chosen to stay at the university to work for the public good, to work with students, to remain independent, and to guide the next generation of deep learning experts. One thing that we’re doing beyond commercial value is thinking about the social implications of AI.

Many of us are now starting to turn our eyes toward applications with social value, like health. We think that we can use deep learning to improve treatment with personalized medicine. I believe that in the future, as we collect more data from millions and billions of people around the Earth, we’ll be able to provide medical advice to billions of people who don’t have access to it right now.

We can imagine many other applications of AI with social value. For example, something that will come out of our research on natural language understanding is providing all kinds of services, like legal services, to those who can’t afford them.

In my community, we are now also turning our eyes toward the social implications of AI. But it’s not just for experts to think about this. I believe that, beyond the math and the jargon, ordinary people can get enough of a sense of what goes on under the hood to participate in the important decisions that will be made about AI in the next few years and decades.

So please set aside your fears and give yourself some space to learn about it. My collaborators and I have written several introductory papers and a book entitled *Deep Learning* to help students and engineers jump into this exciting field.

There are also many online resources: software, tutorials, videos. Many undergraduate students are learning a lot about deep learning research by themselves, to later join the ranks of labs like mine.

AI is going to have a profound impact on our society, so it’s important to ask how we are going to use it. Immense positives may come along with negatives, such as military use or rapid, disruptive changes in the job market. To make sure the collective choices made about AI in the next few years will benefit all, every citizen should take an active role in defining how AI will shape our future.”

Who is Yoshua Bengio?

Yoshua Bengio is a Full Professor in the Department of Computer Science and Operations Research, head of the Montreal Institute for Learning Algorithms (MILA), co-director of the CIFAR program on Learning in Machines and Brains, and Canada Research Chair in Statistical Learning Algorithms. His main research ambition is to understand the principles of learning that yield intelligence. He teaches a graduate course in Machine Learning (IFT6266) and supervises a large group of graduate students and post-docs. His research is widely cited (over 65,000 citations found by Google Scholar in April 2017, with an h-index of 95).

Yoshua Bengio is currently an action editor for the Journal of Machine Learning Research, associate editor for the Neural Computation journal, editor for Foundations and Trends in Machine Learning, and has been an associate editor for the Machine Learning Journal and the IEEE Transactions on Neural Networks.

Yoshua Bengio was Program Chair for NIPS’2008 and General Chair for NIPS’2009 (NIPS is the flagship conference in the areas of learning algorithms and neural computation). Since 1999, he has been co-organizing the Learning Workshop with Yann LeCun, with whom he also created the International Conference on Learning Representations (ICLR). He has also organized or co-organized numerous other events, principally the deep learning workshops and symposia at NIPS and ICML since 2007.