The goal of AI is to create a machine that can mimic a human mind, and to do that it needs learning capabilities. But how does machine learning work?

Technically speaking, machine learning is all about testing, testing, testing. It starts with training data and then uses that data to predict what you might like. When you respond to a predicted selection, your answer is recorded.

Machine learning is a subfield of computer science that evolved from the study of pattern recognition and computational learning theory in artificial intelligence. Machine learning explores the study and construction of algorithms that can learn from and make predictions on data.


Harry Brelsford, Director of Business Development, LeadScorz.

The goal of AI is to create a machine that can mimic a human mind, and to do that it needs learning capabilities. However, the goals of AI researchers are quite broad and include not only learning, but also knowledge representation, reasoning, and even things like abstract thinking. Machine learning, on the other hand, is solely focused on writing software that can learn from past experience. Machine learning is actually more closely related to data mining and statistical analysis than to AI. (Gary Sims)

Machine learning develops algorithms for making predictions from data. It is about generalizing from known examples and then making predictions about new data instances.
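To make "generalizing to new data instances" concrete, here is a minimal sketch using a 1-nearest-neighbour classifier, chosen only because it is short. The data set, the feature meanings, and the function names are all hypothetical and invented for illustration.

```python
# Hypothetical toy data: (hours_studied, hours_slept) -> "pass" / "fail".
# These numbers are made up purely for illustration.
TRAINING_DATA = [
    ((1.0, 4.0), "fail"),
    ((2.0, 5.0), "fail"),
    ((6.0, 7.0), "pass"),
    ((8.0, 6.0), "pass"),
]


def squared_distance(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def predict(features, training_data=TRAINING_DATA):
    """Return the label of the closest training example (1-nearest neighbour)."""
    _, label = min(
        training_data,
        key=lambda example: squared_distance(example[0], features),
    )
    return label


# Neither of these points appears in the training data.
print(predict((7.0, 8.0)))
print(predict((1.5, 3.0)))
```

The "learning" here is nothing more than remembering examples, but the sketch shows the basic shape of the task: fit to known data, then predict labels for instances the program has never seen.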

Professor Andrew Ng’s lectures for Machine Learning (CS 229) in the Stanford Computer Science department are very informative.

Professor Ng provides an overview of the course in this introductory meeting.

Professor Ng lectures on linear regression, gradient descent, and normal equations and discusses how they relate to machine learning.
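For readers who want a feel for these topics before watching, the following is a rough sketch (not taken from the lecture) that fits a one-feature linear regression in two ways: by batch gradient descent and by solving the normal equations in closed form. The data, learning rate, step count, and function names are assumptions picked for illustration.

```python
# Hypothetical toy data generated from y = 2x + 1 with no noise.
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [1.0, 3.0, 5.0, 7.0, 9.0]


def fit_gradient_descent(xs, ys, learning_rate=0.05, steps=5000):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error on every training example with the current w, b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step downhill.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def fit_closed_form(xs, ys):
    """Solve the normal equations directly for the one-feature case."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    w = covariance / variance
    b = mean_y - w * mean_x
    return w, b


print(fit_gradient_descent(XS, YS))
print(fit_closed_form(XS, YS))
```

Both routes should land on roughly the same slope and intercept. Gradient descent gets there iteratively and depends on a suitable learning rate, while the closed-form solution computes the answer in one pass.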

Professor Ng delves into locally weighted regression, probabilistic interpretation, and logistic regression, and how they relate to machine learning.

Professor Ng lectures on Newton’s method, exponential families, and generalized linear models and how they relate to machine learning.

Professor Ng lectures on generative learning algorithms and Gaussian discriminant analysis and their applications in machine learning.

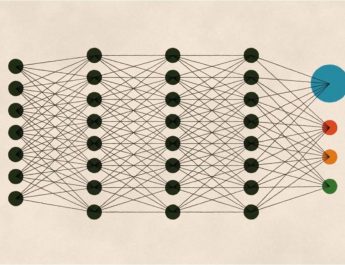

Professor Ng discusses the applications of naive Bayes, neural networks, and support vector machines.

Professor Ng lectures on optimal margin classifiers, KKT conditions, and SVM duals.

Professor Ng continues his lecture about support vector machines, including soft margin optimization and kernels.

Professor Ng delves into learning theory, covering bias, variance, empirical risk minimization, the union bound, and Hoeffding’s inequality.

Professor Ng continues his lecture on learning theory by discussing VC dimension and model selection.

Andrew Yan-Tak Ng is a Chinese American computer scientist. He is the chief scientist at Baidu Research in Silicon Valley. In addition, he is an associate professor in the Department of Computer Science and the Department of Electrical Engineering at Stanford University. Ng is also the co-founder and chairman of Coursera, an online education platform.

Ng researches primarily in machine learning and deep learning. His early work includes the Stanford Autonomous Helicopter project, which developed one of the most capable autonomous helicopters in the world, and the STAIR (STanford Artificial Intelligence Robot) project, which resulted in ROS, a widely used open-source robotics software platform.

Ng is also the author or co-author of over 100 published papers in machine learning, robotics, and related fields, and some of his work in computer vision has been featured in press releases and reviews. In 2008, he was named to the MIT Technology Review TR35 as one of the top 35 innovators in the world under the age of 35. In 2007, Ng was awarded a Sloan Fellowship. For his work in artificial intelligence, he is also a recipient of the Computers and Thought Award (2009). (Wikipedia)